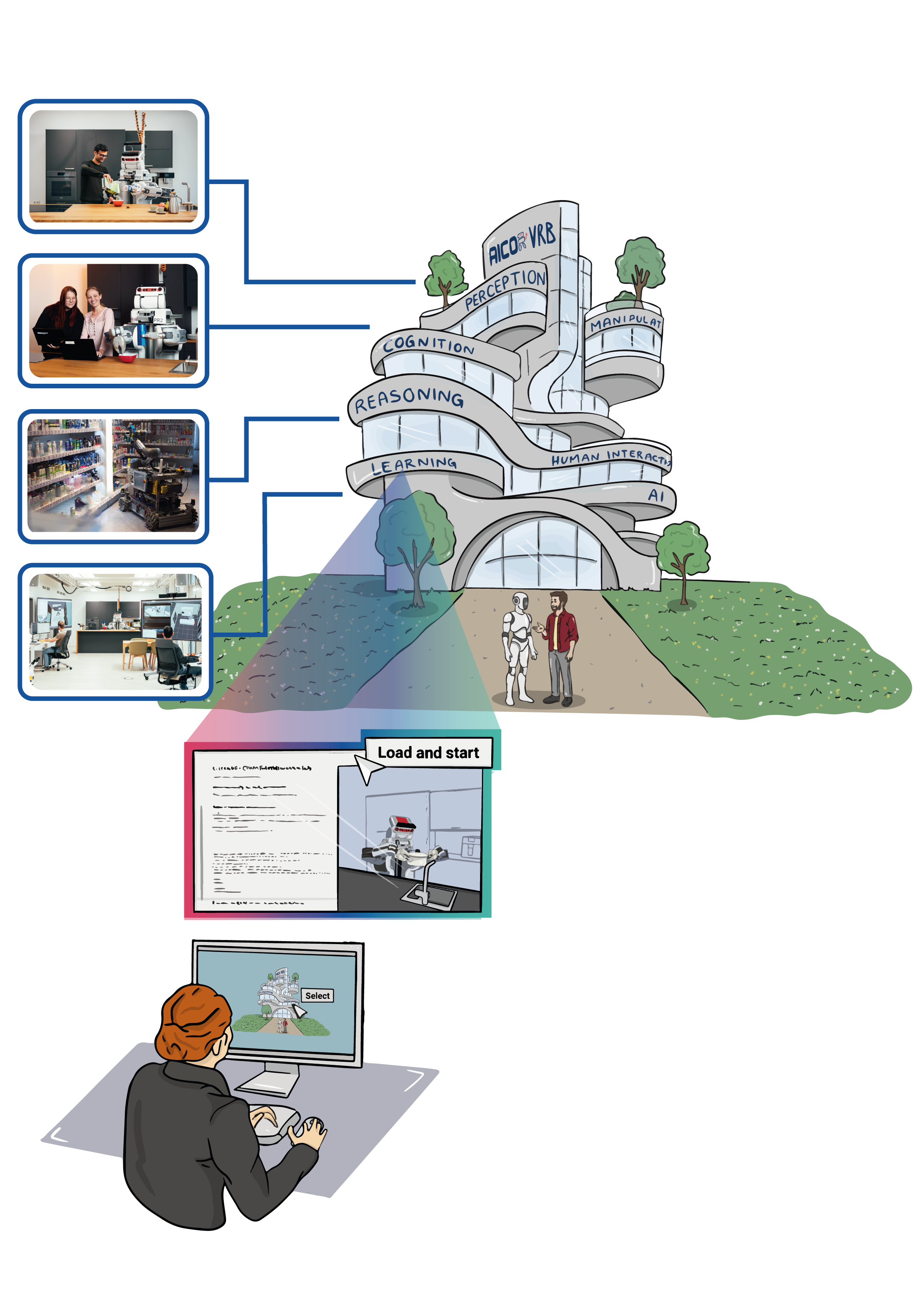

The lecture course "Robot Programming with ROS" takes a practical approach to programming robots with the Robot Operating System (ROS). The course is set in the virtual research building laboratories, where students apply theoretical concepts in a simulated real-world environment. The course materials, including exercise sheets and programming environments, are available on GitHub, so students can start working on hands-on exercises directly. Practical examples are integrated into the curriculum to connect theoretical knowledge with real-world application and to give students the skills and confidence to tackle robot programming in professional settings. Beyond the fundamentals of ROS, the course prepares learners to work with evolving robotics technology.

Dynamic Retail Robotics Laboratory

This laboratory focuses on addressing the complex challenges robots face within retail settings. Robots in this lab can autonomously deploy themselves in retail stores and constantly adapt to changing retail environments, including shelf layouts and product placements. They are trained to manage inventory, guide customers, and integrate real-time product information from various sources into actionable knowledge. Our goal is to develop robots that not only support shopping and inventory tasks but also seamlessly adjust to new products and store layouts, enhancing customer service and operational efficiency in the retail ecosystem.

In this laboratory, you are provided with two versatile robot action plans tailored for retail environments. The first plan focuses on creating semantic digital twins of shelf systems in retail stores, while the second is designed for restocking shelves. You have the flexibility to choose the specific task, robot, and environment. Once selected, you can execute the action plan through a software container, streamlining the process of implementing these robotic solutions in real-world retail settings.

Lecture Course: Actionable Knowledge Representation

The lecture course "Actionable Knowledge Representation" covers how abstract knowledge can be made actionable in the perception-action loops of robot agents. The course uses the resources of the AICOR virtual research building: the knowledge bases of the KnowRob system, the web-based knowledge service openEASE, and the practical scenarios provided by virtual robot laboratories. It explores methods for representing knowledge in a form that is both machine-understandable and actionable, focusing on the acquisition of knowledge from diverse sources, such as web scraping, and the integration of various knowledge segments. It also addresses reasoning about knowledge and demonstrates how this knowledge can be used by different agents (ranging from websites and AR applications to robots) to assist users in their daily activities. The practical component of the course is delivered through platform-independent Jupyter notebooks based on Python, which keeps software requirements minimal for all participants. The course materials are hosted on GitHub and connect theoretical knowledge representation concepts with their practical applications.
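To make the idea of machine-understandable, actionable knowledge concrete, the following is a minimal sketch in plain Python. It is not the KnowRob or openEASE API; the facts, the `query` helper, and the `where_to_fetch` function are hypothetical names chosen for illustration. Knowledge is stored as subject-predicate-object triples, and an agent turns a query result into a decision about where to fetch an item.

```python
from typing import Iterator, List, Optional, Tuple

Triple = Tuple[str, str, str]

# Hypothetical facts, e.g. as they might be gathered from several sources.
FACTS: List[Triple] = [
    ("milk", "is_a", "perishable"),
    ("milk", "stored_in", "fridge"),
    ("cereal", "stored_in", "cupboard"),
]


def query(
    facts: List[Triple],
    s: Optional[str] = None,
    p: Optional[str] = None,
    o: Optional[str] = None,
) -> Iterator[Triple]:
    """Yield all triples matching the pattern; None acts as a wildcard."""
    for fs, fp, fo in facts:
        if (s is None or s == fs) and (p is None or p == fp) and (o is None or o == fo):
            yield (fs, fp, fo)


def where_to_fetch(item: str) -> Optional[str]:
    """Turn stored knowledge into an action parameter: the fetch location."""
    for _, _, location in query(FACTS, s=item, p="stored_in"):
        return location
    return None  # the knowledge base has no storage location for this item


if __name__ == "__main__":
    print(where_to_fetch("milk"))
    print(where_to_fetch("tea"))
```

The same `query` function could serve a website, an AR application, or a robot plan; only the consumer of the result differs.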

euROBIN Demo

TIAGo robot in the IAI Bremen apartment laboratory.

Multiverse Labs

The Multiverse Framework, supported by euROBIN, is a decentralized simulation framework designed to integrate multiple advanced physics engines with various photo-realistic graphics engines to simulate everything. The Interactive Virtual Reality Labs use Unreal Engine for rendering (optimized for the Meta Quest 3 headset) and MuJoCo for physics computation. They support the simultaneous operation of multiple labs and enable real-time interaction among multiple users.

Automated Behaviour-Driven Acceptance Testing of Robotic Systems

This simulation setup introduces a structured method for testing whether robot behaviors meet defined acceptance criteria (AC), using a pick-and-place task in a simulation environment. Instead of relying on manual evaluations or ambiguous rulebooks, we apply Behaviour-Driven Development (BDD), a technique from software engineering that describes expected behavior in the form of Given–When–Then scenarios.

In this setup, you’ll use Isaac Sim to run automated acceptance tests on a robotic system. The tests are defined using a domain-specific language and executed against different robot and environment configurations. This allows you to observe how small changes impact performance, making it easier to validate and understand robotic behavior in a systematic way. To write your own acceptance tests, you can refer to this tutorial for modelling test scenarios.
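The Given–When–Then structure described above can be sketched in plain Python. This is an illustration only: it does not use Isaac Sim or the domain-specific language of this setup, and the `World`, `Scenario`, and `pick_and_place` names are hypothetical stand-ins for a simulated environment and robot behavior.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class World:
    """Hypothetical, heavily simplified state of a pick-and-place scene."""
    object_location: str = "table"
    gripper_holding: bool = False


@dataclass
class Scenario:
    """One acceptance test expressed as Given-When-Then."""
    name: str
    given: Callable[[], World]      # set up the initial state
    when: Callable[[World], None]   # run the behavior under test
    then: Callable[[World], bool]   # check the acceptance criterion

    def run(self) -> bool:
        world = self.given()
        self.when(world)
        return self.then(world)


def pick_and_place(world: World, target: str) -> None:
    """Stand-in for the robot behavior: pick the object, then place it."""
    world.gripper_holding = True
    world.object_location = target
    world.gripper_holding = False


scenario = Scenario(
    name="Object is placed on the shelf",
    given=lambda: World(object_location="table"),
    when=lambda w: pick_and_place(w, "shelf"),
    then=lambda w: w.object_location == "shelf" and not w.gripper_holding,
)

if __name__ == "__main__":
    print(scenario.name, "->", "PASS" if scenario.run() else "FAIL")
```

Keeping the three steps as separate callables means the same `then` check can be reused across different robot and environment configurations by swapping only `given` or `when`.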

Chapter 01 - Creating a Semantic Environment

To enter Chapter one, click here: Chapter 1!

Chapter 02 - First Plan - Robot Movement and Perception

To enter Chapter two, click here: Chapter 2!

Chapter 03 - Querying the Knowledge Base System

To enter Chapter three, click here: Chapter 3!

Chapter 04 - Completing the Full Transportation Task

To enter Chapter four, click here: Chapter 4!

Chapter 05 - Creating Your Own LLM Assistant

To enter Chapter five, click here: Chapter 5!