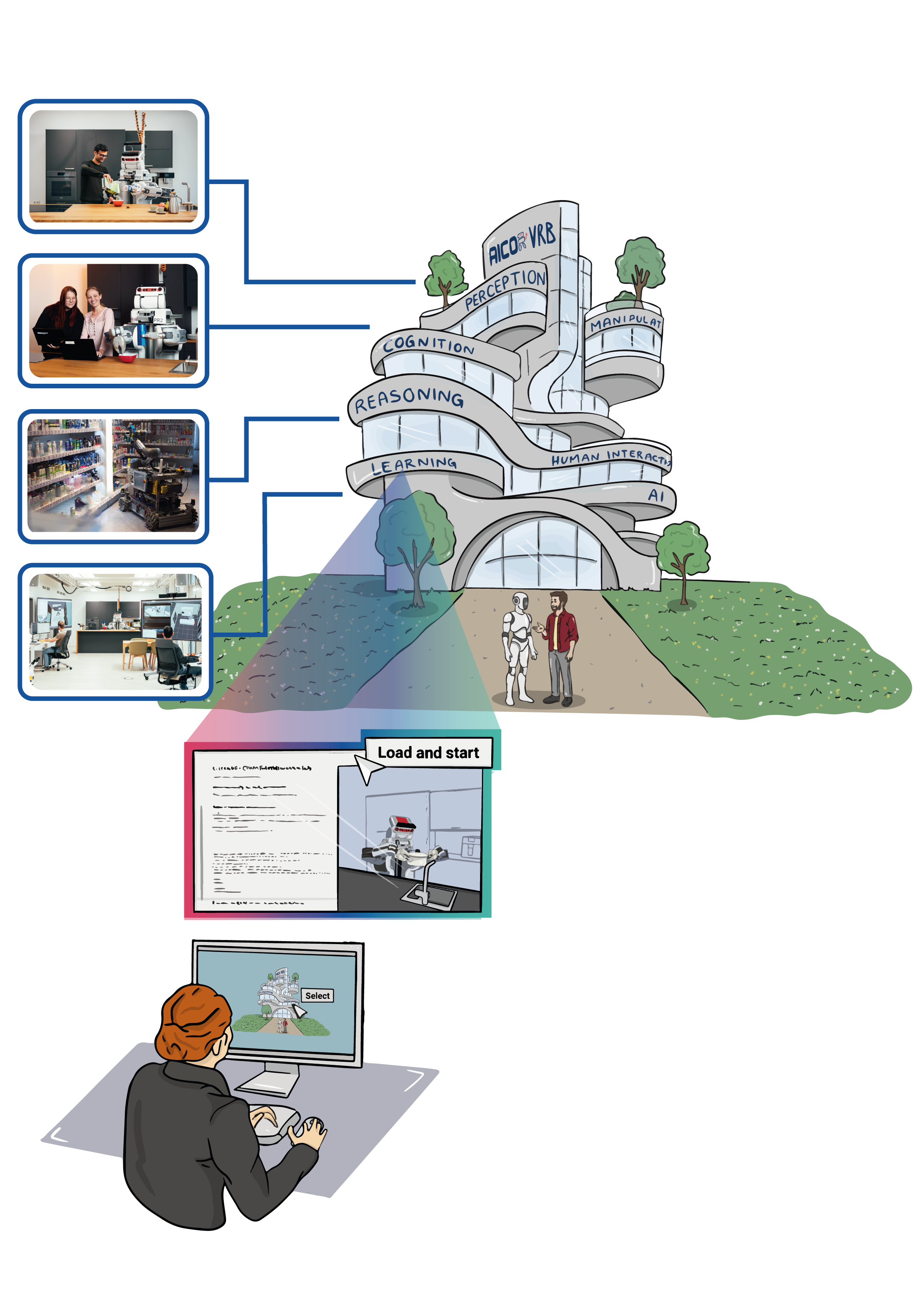

Our Perception Executive Laboratory is centered around RoboKudo, a cognitive perception framework designed for robotic manipulation tasks. RoboKudo follows a multi-expert approach: it processes unstructured sensor data and annotates it with the results of several complementary computer vision algorithms. This open-source framework enables the flexible creation and execution of task-specific perception processes that integrate multiple vision methods. Its technical backbone is the Perception Pipeline Tree (PPT), a novel data structure that extends Behavior Trees with a focus on robot perception. Designed to run within a robot’s perception-action loop, RoboKudo interprets perception task queries, such as locating a milk box in a fridge, and constructs a specialized perception process in the form of a PPT that combines the computer vision methods appropriate for the task at hand.
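To make the multi-expert idea more concrete, here is a minimal Python sketch. It assumes a simplified model in which each "expert" is a function that reads object hypotheses from a shared analysis structure and attaches its own annotations to them. The classes `Hypothesis` and `AnalysisStructure`, the three toy experts, and their thresholds are hypothetical illustrations, not RoboKudo's actual API; real experts would run vision algorithms on camera images and point clouds rather than simple rules on region dimensions.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Hypothesis:
    """One object hypothesis (e.g. a segmented region) and the annotations attached to it."""
    region: Dict[str, float]  # hypothetical fields: w, h, d in cm; brightness in [0, 1]
    annotations: Dict[str, str] = field(default_factory=dict)


@dataclass
class AnalysisStructure:
    """Hypothetical shared container that every expert reads from and writes to."""
    hypotheses: List[Hypothesis]


# An expert inspects the shared structure and adds annotations in place.
Expert = Callable[[AnalysisStructure], None]


def size_expert(cas: AnalysisStructure) -> None:
    for h in cas.hypotheses:
        volume = h.region["w"] * h.region["h"] * h.region["d"]
        h.annotations["size"] = "large" if volume > 1000 else "small"


def shape_expert(cas: AnalysisStructure) -> None:
    for h in cas.hypotheses:
        # Toy rule: regions much taller than wide are labelled box-like.
        h.annotations["shape"] = "box" if h.region["h"] > 1.5 * h.region["w"] else "flat"


def color_expert(cas: AnalysisStructure) -> None:
    for h in cas.hypotheses:
        h.annotations["color"] = "white" if h.region["brightness"] > 0.7 else "dark"


def run_experts(cas: AnalysisStructure, experts: List[Expert]) -> List[Dict[str, str]]:
    """Let every expert annotate the same hypotheses, then return the combined annotations."""
    for expert in experts:
        expert(cas)
    return [h.annotations for h in cas.hypotheses]


scene = AnalysisStructure(hypotheses=[
    Hypothesis(region={"w": 7, "h": 20, "d": 7, "brightness": 0.9}),   # milk-box-like
    Hypothesis(region={"w": 25, "h": 2, "d": 25, "brightness": 0.3}),  # plate-like
])
print(run_experts(scene, [size_expert, shape_expert, color_expert]))
# [{'size': 'small', 'shape': 'box', 'color': 'white'}, {'size': 'large', 'shape': 'flat', 'color': 'dark'}]
```

Because every expert writes to the same structure, later steps can combine evidence from several algorithms for each object instead of relying on a single detector.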

Description

RoboKudo, an open-source perception framework for mobile manipulation systems, makes it possible to flexibly generate and execute task-specific perception processes that combine multiple vision methods. The framework is based on a novel concept that we call Unstructured Information Management (UIM) on Behavior Trees (BTs), or UIMoBT for short, a mechanism for analyzing unstructured data with non-linear process flows. It is technically realized through a data structure called the Perception Pipeline Tree (PPT), which extends Behavior Trees with a focus on robot perception. RoboKudo is designed to be integrated into the perception-action loop of a robot system. The robot system can pose perception tasks to RoboKudo via a query-answering interface. This interface translates a perception task query such as “find a milk box in the fridge” into a specialized perception process, represented as a PPT, that contains a combination of computer vision methods suitable for the given task.
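The following minimal Python sketch illustrates how Behavior Tree control flow allows non-linear perception process flows. It assumes a toy blackboard (a plain dict) shared by all nodes, hand-written `Sequence` and `Selector` nodes, and a hypothetical `build_ppt` function standing in for the query-to-PPT translation; none of these names come from RoboKudo, and the two "detectors" are placeholder rules rather than real vision methods. The `Selector` provides the non-linear part: if the first detector fails, the tree falls back to the second one.

```python
from enum import Enum
from typing import Callable, Dict, List


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"


Blackboard = Dict[str, object]


class Leaf:
    """Leaf node wrapping a single perception step (hypothetical annotator)."""

    def __init__(self, name: str, fn: Callable[[Blackboard], bool]):
        self.name, self.fn = name, fn

    def tick(self, bb: Blackboard) -> Status:
        bb.setdefault("trace", []).append(self.name)  # record execution order
        return Status.SUCCESS if self.fn(bb) else Status.FAILURE


class Sequence:
    """Ticks children in order and fails as soon as one child fails."""

    def __init__(self, children: List):
        self.children = children

    def tick(self, bb: Blackboard) -> Status:
        for child in self.children:
            if child.tick(bb) is Status.FAILURE:
                return Status.FAILURE
        return Status.SUCCESS


class Selector:
    """Ticks children in order and succeeds as soon as one child succeeds (fallback)."""

    def __init__(self, children: List):
        self.children = children

    def tick(self, bb: Blackboard) -> Status:
        for child in self.children:
            if child.tick(bb) is Status.SUCCESS:
                return Status.SUCCESS
        return Status.FAILURE


def template_matcher(bb: Blackboard) -> bool:
    # Placeholder: only "knows" cereal boxes, so it fails on other objects.
    bb["detections"] = [r for r in bb["regions"] if r == "cereal_box"]
    return bool(bb["detections"])


def generic_classifier(bb: Blackboard) -> bool:
    # Placeholder: recognizes anything whose label ends in "_box".
    bb["detections"] = [r for r in bb["regions"] if r.endswith("_box")]
    return bool(bb["detections"])


def build_ppt(query: Dict[str, str]) -> Sequence:
    """Hypothetical query-to-PPT translation for queries like 'find a milk box in the fridge'."""
    target, location = query["type"], query["location"]

    def collect_data(bb: Blackboard) -> bool:
        # Stand-in for capturing sensor data at the queried location.
        bb["regions"] = bb["scene"].get(location, [])
        return bool(bb["regions"])

    def filter_target(bb: Blackboard) -> bool:
        bb["result"] = [d for d in bb["detections"] if d == target]
        return bool(bb["result"])

    return Sequence([
        Leaf("collect_data", collect_data),
        Selector([Leaf("template_matcher", template_matcher),
                  Leaf("generic_classifier", generic_classifier)]),
        Leaf("filter_target", filter_target),
    ])


bb = {"scene": {"fridge": ["milk_box", "cup"], "table": ["cereal_box"]}}
ppt = build_ppt({"type": "milk_box", "location": "fridge"})
print(ppt.tick(bb).name, bb["result"])  # SUCCESS ['milk_box']
print(bb["trace"])  # ['collect_data', 'template_matcher', 'generic_classifier', 'filter_target']
```

In this sketch the choice of nodes is hard-coded in `build_ppt` for brevity; a real query-answering interface would select the vision methods based on the query and on what is available.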

Overview video

Our overview video presents the key ideas of the RoboKudo framework and highlights demonstrations and experiments implemented with RoboKudo. The video includes spoken narration.


Publications

- P. Mania; S. Stelter; G. Kazhoyan; M. Beetz, “An Open and Flexible Robot Perception Framework for Mobile Manipulation Tasks,” 2024 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2024. Accepted for publication.

- P. Mania; F. K. Kenfack; M. Neumann; M. Beetz, “Imagination-enabled robot perception,” 2021 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS). IEEE, 2021.