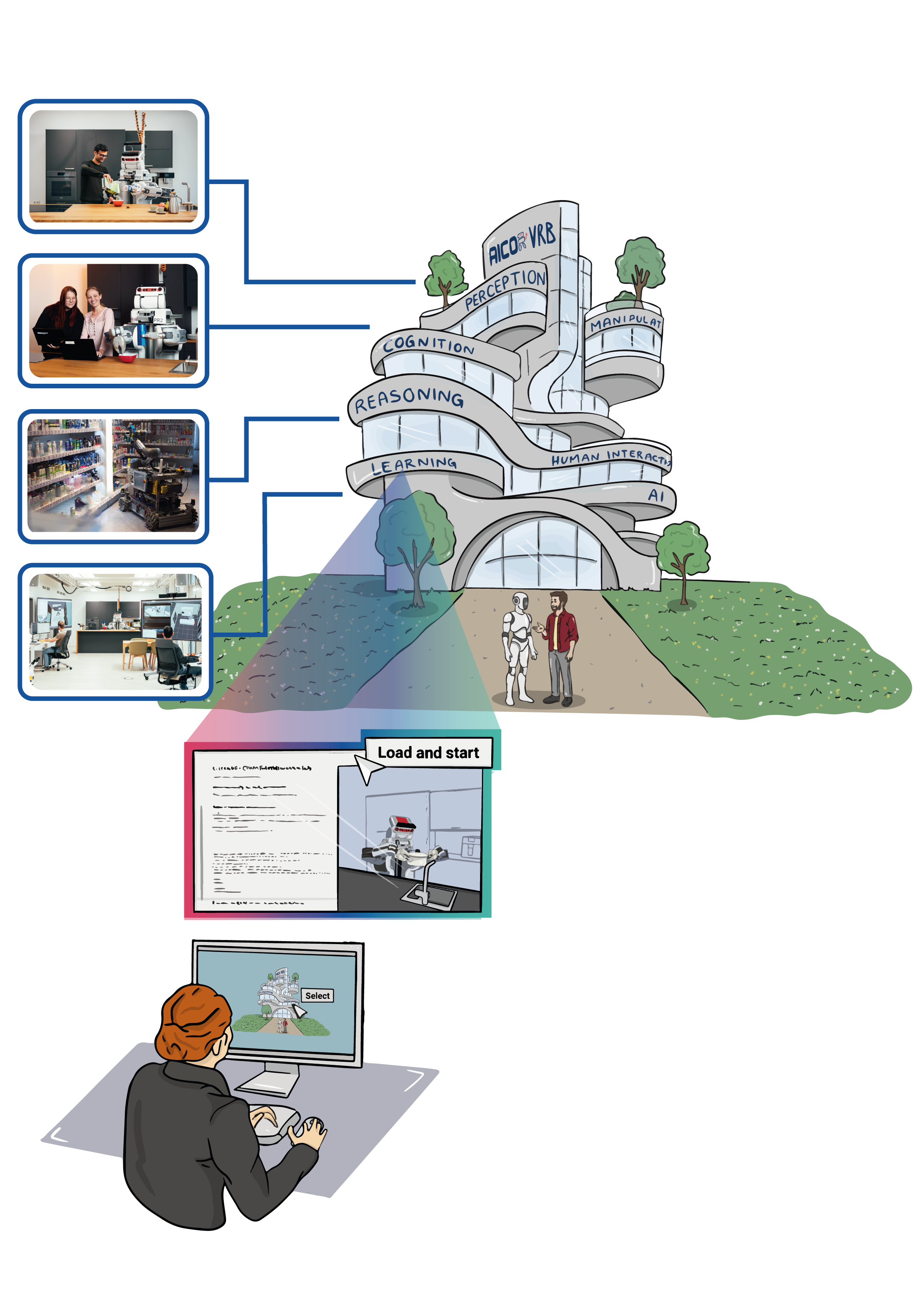

The CRAM Plan Executive Laboratory explores the capabilities of CRAM (Cognitive Robot Abstract Machine), a toolbox for the development, implementation, and deployment of software on autonomous robots. CRAM provides tools and libraries that support robot software development, including geometric reasoning and fast simulation mechanisms. These are used to build cognition-enabled control programs that increase robot autonomy. CRAM also includes introspection tools that allow robots to reflect on their actions and autonomously refine their strategies to improve performance. CRAM is developed primarily in Common Lisp, with some components in C/C++, and is integrated with the ROS middleware ecosystem. This laboratory aims to advance cognitive robotics through the use of CRAM.

The link below leads to the CRAM homepage, which hosts the open-source code, installation instructions, tutorials, and documentation for the CRAM software framework.