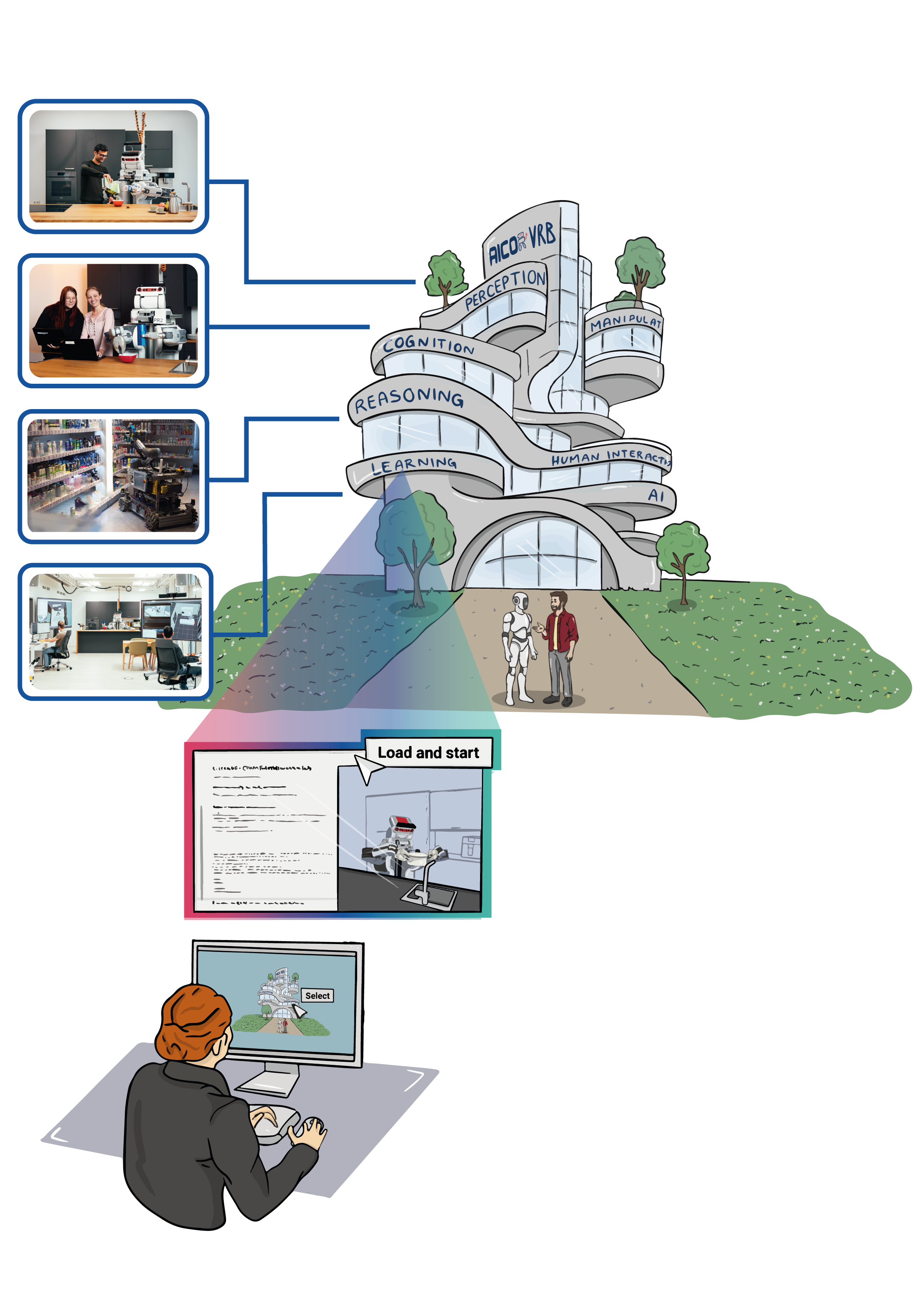

This laboratory is dedicated to advancing the capabilities of robot agents in executing object transportation tasks within human-centric environments such as homes and retail spaces. It provides a versatile platform for exploring and refining generalized robot plans that manage the movement of diverse objects across varied settings for multiple purposes. By focusing on the adaptability and scalability of robotic programming, the lab aims to enhance the understanding and application of robotics in everyday contexts, ultimately improving the generalizability, transferability, and effectiveness of robot plans in real-world scenarios.

In the laboratory, you are provided with a generalized open-source robot plan capable of executing various object transportation tasks, including both table setting and cleaning, across diverse domestic settings. These settings range from entire apartments to kitchen environments, and the plan is adaptable to various robots. You can customize the execution by selecting the appropriate environment, task, and robot, and then run it within a software container.

From Recipes to Actions

This virtual lab addresses the challenge of enabling robots to autonomously prepare meals by bridging natural language recipe instructions and robotic action execution. We propose a novel methodology leveraging Actionable Knowledge Graphs (AKGs) to map recipe instructions onto core categories of robotic manipulation tasks, which represent the specific motion parameters required for task execution.
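The idea of mapping a recipe instruction onto an action category can be illustrated with a minimal Python sketch. This is not the lab's implementation: the `VERB_TO_CORE` table, the `ActionCall` type, and the `translate` function are hypothetical names, and a hard-coded dictionary stands in for a query against an actual Actionable Knowledge Graph.

```python
from dataclasses import dataclass

# Hypothetical stand-in for an AKG lookup: recipe verb -> action core.
# A real system would query a knowledge graph instead of a fixed table.
VERB_TO_CORE = {
    "slice": "cutting",
    "halve": "cutting",
    "stir": "mixing",
    "whisk": "mixing",
    "pour": "pouring",
}

ARTICLES = {"the", "a", "an"}


@dataclass
class ActionCall:
    core: str    # action category, e.g. "cutting"
    verb: str    # the concrete technique named in the recipe
    target: str  # the object the action is applied to


def translate(instruction: str) -> ActionCall:
    """Map a simple imperative instruction onto an action core."""
    words = instruction.lower().strip(" .").split()
    if not words:
        raise ValueError("empty instruction")
    verb = words[0]
    if verb not in VERB_TO_CORE:
        raise ValueError(f"no action core known for verb {verb!r}")
    # Naive target extraction: everything after the verb, minus articles.
    target = " ".join(w for w in words[1:] if w not in ARTICLES)
    return ActionCall(core=VERB_TO_CORE[verb], verb=verb, target=target)


call = translate("Slice the cucumber.")
print(call)
```

The sketch only handles instructions that start with a known verb; inferring motion parameters such as tool choice or cutting position would require the richer knowledge that the AKG approach is meant to supply.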

Please select a recipe below to see the recipe instructions and what actions they are translated to, as well as the action parameters the robot would infer for action execution.

Recipe Details

Select a recipe from the dropdown below to view its details.

With these actions and their parameters, you can instantiate the Action Core Lab below and simulate a robot performing the action.

Action Cores

This laboratory focuses on advancing robotic capabilities in performing core actions such as cutting, mixing, pouring, and transporting within dynamic, human-centered environments like homes.

Actionable Knowledge Graph Laboratory

In this virtual research lab, we aim to empower robots with the ability to transform abstract knowledge from the web into actionable tasks, particularly in everyday manipulations like cutting, pouring, or whisking. By extracting information from diverse internet sources, ranging from biology textbooks and Wikipedia entries to cookbooks and instructional websites, the robots create knowledge graphs that inform generalized action plans. These plans enable robots to adapt cutting techniques such as slicing, quartering, and peeling to various fruits using suitable tools, making abstract web knowledge practically applicable in robot perception-action loops.

Show me the plan for the following

openEASE Knowledge Service Laboratory

openEASE is a web-based knowledge service that leverages the KnowRob robot knowledge representation and reasoning system to offer a machine-understandable and processable platform for sharing knowledge and reasoning capabilities. It encompasses a broad spectrum of knowledge, including insights into agents (notably robots and humans), their environments (spanning objects and substances), tasks, actions, and detailed manipulation episodes involving both robots and humans. These episodes are richly documented through robot-captured images, sensor data streams, and full-body poses, providing a comprehensive understanding of interactions. openEASE is equipped with a robust query language and advanced inference tools, enabling users to conduct semantic queries and reason about the data to extract specific information. This functionality allows robots to articulate insights about their actions, motivations, methodologies, outcomes, and observations, thereby facilitating a deeper understanding of robotic operations and interactions within their environments.

In this laboratory, you have access to openEASE, a web-based interactive platform that offers knowledge services. Through openEASE, you can choose from various knowledge bases, each representing a robotic experiment or an episode where humans demonstrate tasks to robots. To start, select a knowledge base, for instance "ease-2020-urobosim-fetch-and-place", and activate it. Then, by clicking on the "examples" button, you can choose specific knowledge queries to run on the selected experiment's knowledge bases, facilitating a deeper understanding and interaction with the data.

For detailed information, click here.

Dynamic Retail Robotics Laboratory

This laboratory focuses on addressing the complex challenges robots face within retail settings. Robots in this lab can autonomously deploy themselves in retail stores and constantly adapt to changing retail environments, including shelf layouts and product placements. They are trained to manage inventory, guide customers, and integrate real-time product information from various sources into actionable knowledge. Our goal is to develop robots that not only support shopping and inventory tasks but also seamlessly adjust to new products and store layouts, enhancing customer service and operational efficiency in the retail ecosystem.

In this laboratory, you are provided with two versatile robot action plans tailored for retail environments. The first plan focuses on creating semantic digital twins of shelf systems in retail stores, while the second is designed for restocking shelves. You have the flexibility to choose the specific task, robot, and environment. Once selected, you can execute the action plan through a software container, streamlining the process of implementing these robotic solutions in real-world retail settings.

The Body Motion Problem

The Body Motion Problem (BMP) is a fundamental challenge in robotics, addressing how robots can compute goal-directed, context-sensitive motions to achieve desired outcomes while adapting to real-world constraints. Beyond simple motion generation, BMP requires robots to interpret task goals, infer causal relationships, predict consequences, and dynamically adjust their actions. This makes BMP computationally complex, necessitating a structured approach to problem-solving. To make it tractable, BMP is decomposed into three interdependent subproblems: (1) Identifying Physical Changes required to fulfill a task request, (2) Determining Actions and Forces needed to achieve these changes, and (3) Generating Forces Through Body Motions that execute the necessary movements reliably. By structuring BMP in this way, researchers can systematically analyze and develop solutions that generalize across diverse tasks and environments.
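The three-stage decomposition can be read as a pipeline in which each stage consumes the output of the previous one. The following Python sketch illustrates only that structure. All names (`Change`, `Action`, `identify_changes`, `determine_actions`, `generate_motions`, `solve_bmp`) and the toy lookup tables are assumptions made for illustration; they do not come from the lab, and real solutions to each subproblem involve reasoning and control rather than table lookups.

```python
from typing import NamedTuple


class Change(NamedTuple):
    obj: str
    before: str
    after: str


class Action(NamedTuple):
    name: str
    obj: str
    force: str  # qualitative placeholder, not a measured quantity


# Toy knowledge, invented for this sketch.
REQUEST_TO_CHANGES = {
    "fill the cup": [Change("cup", "empty", "filled")],
}
CHANGE_TO_ACTION = {
    ("empty", "filled"): ("pour", "gravity acting on the liquid"),
}


def identify_changes(request: str) -> list[Change]:
    """Subproblem 1: which physical changes fulfil the request?"""
    return REQUEST_TO_CHANGES[request]


def determine_actions(changes: list[Change]) -> list[Action]:
    """Subproblem 2: which actions and forces bring those changes about?"""
    actions = []
    for change in changes:
        name, force = CHANGE_TO_ACTION[(change.before, change.after)]
        actions.append(Action(name, change.obj, force))
    return actions


def generate_motions(actions: list[Action]) -> list[str]:
    """Subproblem 3: which body motions generate the required forces?"""
    return [f"{a.name}: move so that {a.force} affects the {a.obj}" for a in actions]


def solve_bmp(request: str) -> list[str]:
    # The stages are chained, mirroring the interdependence of the subproblems.
    return generate_motions(determine_actions(identify_changes(request)))


for motion in solve_bmp("fill the cup"):
    print(motion)
```

Keeping the stages as separate functions makes it possible to replace any one of them, for example swapping the table in the second stage for a learned or physics-based model, without touching the others.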

Automated Behaviour-Driven Acceptance Testing of Robotic Systems

This simulation setup introduces a structured method for testing whether robot behaviors meet defined acceptance criteria (AC), using a pick-and-place task in a simulation environment. Instead of relying on manual evaluations or ambiguous rulebooks, we apply Behaviour-Driven Development (BDD), a technique from software engineering that describes expected behavior in the form of Given-When-Then scenarios.

In this setup, you'll use Isaac Sim to run automated acceptance tests on a robotic system. The tests are defined using a domain-specific language and executed against different robot and environment configurations. This allows you to observe how small changes impact performance, making it easier to validate and understand robotic behavior in a systematic way. To write your own acceptance tests, refer to this tutorial on modelling test scenarios.
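To make the Given-When-Then structure concrete, here is a minimal Python sketch of one scenario executed against two configurations. It does not use the lab's domain-specific language or Isaac Sim: the `Scenario` class, the step functions, and the configuration keys (`gripper_max_kg`, `object_kg`) are hypothetical, and a plain dictionary stands in for the simulated world.

```python
from dataclasses import dataclass
from typing import Callable

World = dict  # toy stand-in for simulation state


@dataclass
class Scenario:
    name: str
    given: Callable[[World], None]  # set up the initial situation
    when: Callable[[World], None]   # trigger the behavior under test
    then: Callable[[World], bool]   # check the acceptance criterion

    def run(self, config: World) -> bool:
        world = dict(config)  # copy so each run starts from a clean state
        self.given(world)
        self.when(world)
        return self.then(world)


def object_is_on_table(world: World) -> None:
    world["object_at"] = "table"


def robot_picks_and_places(world: World) -> None:
    # Toy behavior: the placement succeeds only if the gripper can carry the object.
    if world["gripper_max_kg"] >= world["object_kg"]:
        world["object_at"] = "shelf"


def object_is_on_shelf(world: World) -> bool:
    return world["object_at"] == "shelf"


scenario = Scenario(
    name="pick and place",
    given=object_is_on_table,
    when=robot_picks_and_places,
    then=object_is_on_shelf,
)

# Hypothetical robot/environment variations.
configs = {
    "strong_gripper": {"gripper_max_kg": 2.0, "object_kg": 0.5},
    "weak_gripper": {"gripper_max_kg": 0.2, "object_kg": 0.5},
}

results = {name: scenario.run(cfg) for name, cfg in configs.items()}
print(results)
```

Running the same scenario over several configurations is what lets a small change, here the gripper's payload limit, show up directly as a pass or a fail.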