To enter Chapter 2, click here: Chapter 2!

Welcome to the second day of our hands-on course!

Today, you'll dive into the basics of robot movement and perception. You will learn how to navigate the robot to a designated location (like a table) and utilize sensors to detect objects, specifically focusing on a milk carton.

Goal: By the end of the session, you will successfully move the robot to a table and implement perception algorithms to identify a milk carton.

Prerequisites

- You have finished Chapter 1 and have a basic understanding of URDF.

- Basic understanding of computer vision and OpenCV is beneficial for the perception part of this tutorial, but not required.

Theoretical Background

- We’ll cover the basics of robot movement planning and perception systems.

- You’ll learn about common issues in perception, such as occlusions and the importance of having a clear line of sight for successful detection.
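The line-of-sight point can be made concrete with a small geometric check. The following is a minimal sketch, not part of PyCRAM or RoboKudo: it assumes obstacles are approximated by axis-aligned boxes and tests whether the straight segment from the camera to the target passes through any of them (the classic slab test). All names (`Box`, `segment_hits_box`, `has_line_of_sight`) and all coordinates are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class Box:
    """Axis-aligned box given by its minimum and maximum corners (hypothetical helper)."""
    lo: Vec3
    hi: Vec3


def segment_hits_box(start: Vec3, end: Vec3, box: Box) -> bool:
    """Slab test: does the segment from start to end pass through the box?"""
    t_enter, t_exit = 0.0, 1.0  # parameter range of the segment still inside all slabs
    for axis in range(3):
        origin = start[axis]
        delta = end[axis] - start[axis]
        if abs(delta) < 1e-12:
            # Segment is parallel to this slab, so it must already lie inside it.
            if origin < box.lo[axis] or origin > box.hi[axis]:
                return False
            continue
        t0 = (box.lo[axis] - origin) / delta
        t1 = (box.hi[axis] - origin) / delta
        if t0 > t1:
            t0, t1 = t1, t0
        t_enter = max(t_enter, t0)
        t_exit = min(t_exit, t1)
        if t_enter > t_exit:
            return False
    return True


def has_line_of_sight(camera: Vec3, target: Vec3, obstacles: Iterable[Box]) -> bool:
    """True if no obstacle box blocks the straight line from camera to target."""
    return not any(segment_hits_box(camera, target, box) for box in obstacles)


# Assumed scene: a cereal box stands between the camera and the milk carton.
milk = (2.0, 0.0, 0.9)
cereal_box = Box(lo=(0.9, -0.1, 0.8), hi=(1.1, 0.1, 1.3))

blocked_view = has_line_of_sight((0.0, 0.0, 1.2), milk, [cereal_box])
clear_view = has_line_of_sight((0.0, 1.0, 1.2), milk, [cereal_box])
print(blocked_view, clear_view)
```

In this assumed scene, moving the camera one metre sideways is enough to look past the obstacle, which is the kind of repositioning a robot may need before detection can succeed.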

PyCRAM Framework

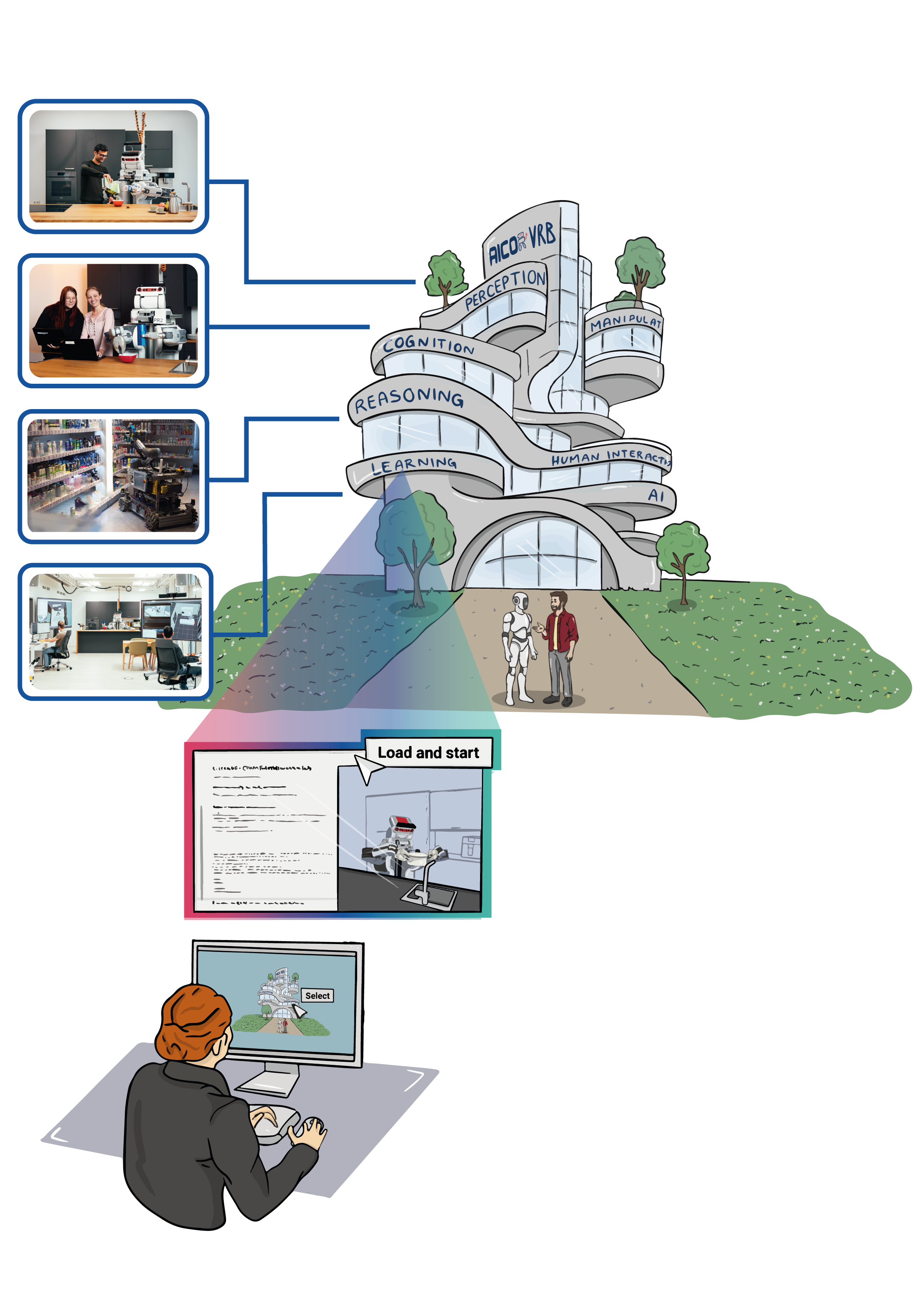

PyCRAM is the Python 3 re-implementation of CRAM. PyCRAM is a toolbox for designing, implementing and deploying software on autonomous robots. The framework provides various tools and libraries for aiding in robot software development as well as geometric reasoning and fast simulation mechanisms to develop cognition-enabled control programs that achieve high levels of robot autonomy. PyCRAM is developed in Python with support for the ROS middleware which is used for communication with different software components as well as the robot.

PyCRAM allows the same high-level plan to be executed on different robot platforms. Below you can see an example of this, where the plan is executed on the PR2 and the IAI's Boxy.

For more information on PyCRAM, please visit the PyCRAM Documentation and GitHub repository here.

Designators

Designators are our way of representing actions, motions, objects and locations.

Specifically in PyCRAM, a designator consists of a description and a specified element. A description describes a set of designators, and a designator is one element of that set. For example, a description could describe an action in which the robot moves to a location from which it can grasp an object. The specific location is not relevant as long as the robot can reach the object. The designator description is resolved at runtime, which yields a designator with specific parameters. The resulting designator can then be performed to make the robot carry out the desired behaviour.

You will learn more about that in the following exercises.
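The difference between a description and a resolved designator can be illustrated with a small, self-contained sketch. This is not PyCRAM's API: the classes `ReachableLocationDescription` and `LocationDesignator`, the `resolve` and `perform` names as used here, and the candidate-sampling strategy are all assumptions made for illustration. The description stands for the whole set of locations within reach of a target, and resolving it at runtime yields concrete locations one at a time.

```python
from dataclasses import dataclass
from math import hypot
from typing import Iterator, Tuple


@dataclass(frozen=True)
class LocationDesignator:
    """One concrete element of the described set: a fully specified position."""
    x: float
    y: float


@dataclass(frozen=True)
class ReachableLocationDescription:
    """Describes every location from which the target is within arm's reach."""
    target: Tuple[float, float]
    reach: float

    def resolve(self) -> Iterator[LocationDesignator]:
        """Lazily yield concrete designators that satisfy the description."""
        tx, ty = self.target
        # Hypothetical sampler: four spots around the target at 80% of the reach.
        d = 0.8 * self.reach
        for dx, dy in ((d, 0.0), (-d, 0.0), (0.0, d), (0.0, -d)):
            yield LocationDesignator(tx + dx, ty + dy)


def perform(location: LocationDesignator) -> str:
    """Stand-in for executing the resolved designator on a robot."""
    return f"navigating to ({location.x:.2f}, {location.y:.2f})"


# The description says "anywhere within 0.5 m of the object at (2.0, 1.0)".
description = ReachableLocationDescription(target=(2.0, 1.0), reach=0.5)

# Resolution happens at runtime and picks one concrete location.
location = next(description.resolve())
message = perform(location)
print(message)
```

Because `resolve` is a generator, a caller could ask for the next candidate if the first one turns out to be unusable, for example because it is occupied by an obstacle.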

Step-by-Step Hands-On Exercises

- Move the Robot: Program the robot to navigate to a predefined location near the table.

- Object Detection: Use a camera sensor to detect and identify the milk carton.

- Understand Object Designators: Learn how to use designators to represent objects.

- Perception Tasks: Learn how our perception executive framework can be adapted to different perception tasks.

Throughout these exercises, we will provide code examples to help you move the robot and utilize computer vision techniques effectively.

Interactive Actions and/or Examples

For Hands-On Exercises 1-3, please use the following Virtual Lab first: Robot Simulation Perception

For Hands-On Exercise 4, please use this Virtual Lab:

RoboKudo Getting Started Lab

Summary

By the end of the session, you’ll have a clearer understanding of basic motion planning and the challenges associated with perception in robotics.

Further Reading/Exercises

- For those interested in the interface between PyCRAM and RoboKudo, check out the PyCRAM Documentation.

- RoboKudo is built upon behavior trees. If you want to read more about the general concept of behavior trees, there is an excellent, comprehensive book on arXiv, available here.

- For sensor data processing, RoboKudo makes heavy use of OpenCV and Open3D.

- Challenge: Experiment with different sensor configurations to improve the accuracy of the object detection process.
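As a rough illustration of the behavior tree concept mentioned above, here is a minimal sketch with the two most common composite nodes: a sequence (all children must succeed) and a fallback (the first child that succeeds wins). It is deliberately simplified and is not RoboKudo's API: there is no RUNNING status, and the leaf functions, the `blackboard` dictionary keys, and the simulated detection results are assumptions made for this example.

```python
from enum import Enum
from typing import Callable, Dict, List


class Status(Enum):
    SUCCESS = "success"
    FAILURE = "failure"


class Leaf:
    """Wraps a function that reads/writes the shared blackboard and returns a Status."""

    def __init__(self, action: Callable[[Dict], Status]):
        self.action = action

    def tick(self, blackboard: Dict) -> Status:
        return self.action(blackboard)


class Sequence:
    """Ticks children in order and fails as soon as one child fails."""

    def __init__(self, children: List):
        self.children = children

    def tick(self, blackboard: Dict) -> Status:
        for child in self.children:
            if child.tick(blackboard) is Status.FAILURE:
                return Status.FAILURE
        return Status.SUCCESS


class Fallback:
    """Ticks children in order and succeeds as soon as one child succeeds."""

    def __init__(self, children: List):
        self.children = children

    def tick(self, blackboard: Dict) -> Status:
        for child in self.children:
            if child.tick(blackboard) is Status.SUCCESS:
                return Status.SUCCESS
        return Status.FAILURE


def acquire_image(blackboard: Dict) -> Status:
    blackboard["image"] = "frame-0"  # placeholder for real sensor data
    return Status.SUCCESS


def detect_by_color(blackboard: Dict) -> Status:
    return Status.FAILURE  # simulated: color segmentation finds nothing


def detect_by_shape(blackboard: Dict) -> Status:
    blackboard["object"] = "milk carton"  # simulated detection result
    return Status.SUCCESS


# Acquire an image, then try the detectors in order until one succeeds.
tree = Sequence([
    Leaf(acquire_image),
    Fallback([Leaf(detect_by_color), Leaf(detect_by_shape)]),
])

blackboard: Dict = {}
result = tree.tick(blackboard)
print(result, blackboard)
```

The fallback node is what lets a pipeline of this shape recover when one detection method fails, without the surrounding sequence having to know which method eventually produced the result.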

Related Videos

CRAM Overview:

RoboKudo Overview: