To enter Chapter 1, click here: Chapter 1!

Welcome to the First Chapter of Our Hands-On Course!

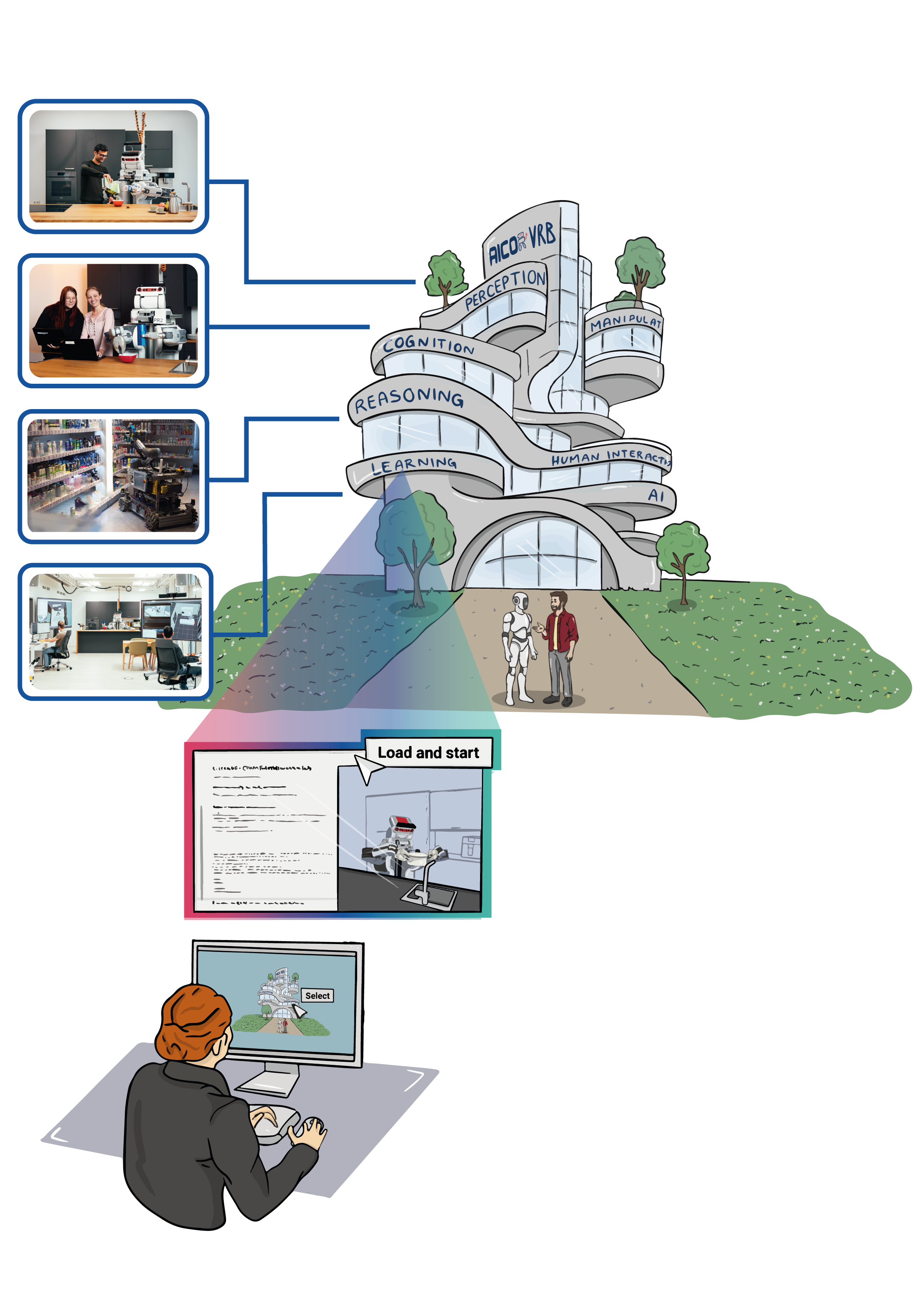

In dynamic robotic environments, scene graphs are a way to represent complex spatial relationships. They act as the backbone of a robot’s understanding, mapping “who is where” and “what connects to what” in spaces that change over time. With scene graphs, robots can navigate, adapt, and interact intelligently, as you’ll see in the video of our lab simulation. This technology is essential for enabling robots to perform tasks in real-world-like settings, such as object handling, precise navigation, and real-time adaptation. By additionally linking the scene graph to a semantic knowledge base, robots gain a higher level of contextual awareness, allowing them to reason about the environment, anticipate changes, and make informed decisions. This brings us closer to fully autonomous, perceptive machines.

In this chapter, you’ll learn how to create a scene graph using URDF (Unified Robot Description Format). URDF is essential because it helps define the structure, shape, and physical properties of objects, allowing robots to interact with them accurately in a simulated environment. After creating the scene graph you will extend it with semantic information, creating a semantic digital twin.

Part 1: Introduction to Scene Graphs in URDF

Goal

By the end of this session, you will have worked with a simple URDF model that includes essential objects like a fridge, a table, and other items, and visualized this setup in RViz.

Theoretical Background

What is URDF?

URDF stands for Unified Robot Description Format. As the name suggests, it was originally designed for describing robots, specifically their physical structure and properties. A robot is an electromechanical device composed of multiple bodies (also called links) connected by joints. Each link represents a physical part of the robot, and joints define how these parts move relative to each other.

However, URDF is not limited to describing robots. In this course, we use it as a tool for defining and simulating an entire environment as a scene graph. This means we use URDF to model various elements in the environment, such as furniture, objects, and other items with which the robot will interact.

URDF helps create a virtual representation of an environment, as seen in the video above, by defining the structure, shape, and physical properties of the objects involved. These details are crucial for creating realistic interactions in simulations, enabling robots to understand their surroundings and perform tasks effectively.

Links and Joints in URDF

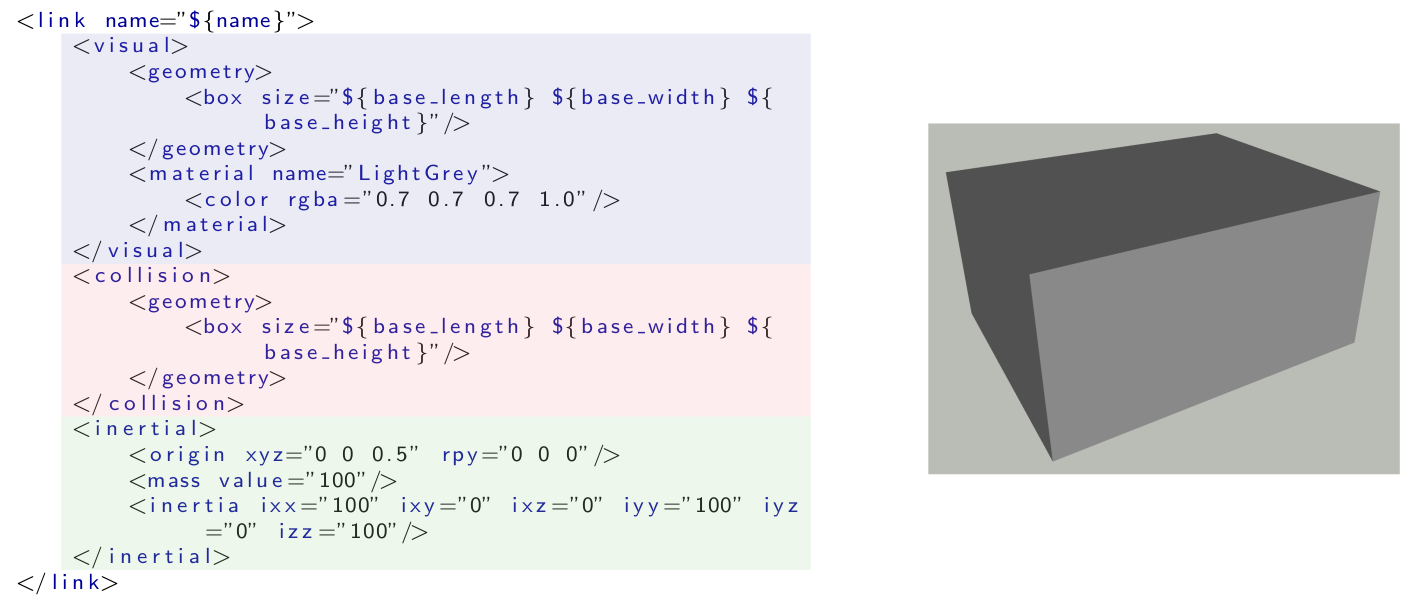

A link in a URDF model represents a physical object in the environment, which could be as simple as a box or as complex as a piece of furniture. A link is defined by several parameters, including:

- Visual Parameter: Defines how the link appears in the simulation.

- Collision Parameter: Defines the simplified shape used to detect collisions, which is generally simpler than the visual parameter to reduce computational load.

- Inertial Parameter: Defines how the link behaves under physical forces, which is important for physics-based simulations like those in Gazebo.
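As a minimal sketch of how these three parameters appear in a URDF file, the Python snippet below embeds a hypothetical `table_top` link and inspects it with the standard library XML parser. The link name, box dimensions, mass, and inertia values are illustrative assumptions, not values taken from the course's environment model.

```python
import xml.etree.ElementTree as ET

# Hypothetical link: a table top modeled as a box (all values are illustrative).
LINK_URDF = """
<link name="table_top">
  <visual>
    <geometry><box size="1.2 0.8 0.05"/></geometry>
  </visual>
  <collision>
    <geometry><box size="1.2 0.8 0.05"/></geometry>
  </collision>
  <inertial>
    <mass value="8.0"/>
    <inertia ixx="0.43" ixy="0" ixz="0" iyy="0.96" iyz="0" izz="1.39"/>
  </inertial>
</link>
"""

link = ET.fromstring(LINK_URDF)

# Collect the parameter blocks that this link defines, in document order.
present = [child.tag for child in link]
mass = float(link.find("inertial/mass").get("value"))

print(link.get("name"), present, mass)
```

Here the visual and collision geometry happen to be the same box. For a detailed mesh, the collision block would typically use a simpler shape than the visual one.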

Joints connect different links and define how they move relative to each other. In an environment model, joints can be used to define relationships such as doors on a fridge that can open or the legs of a table attached to its surface.

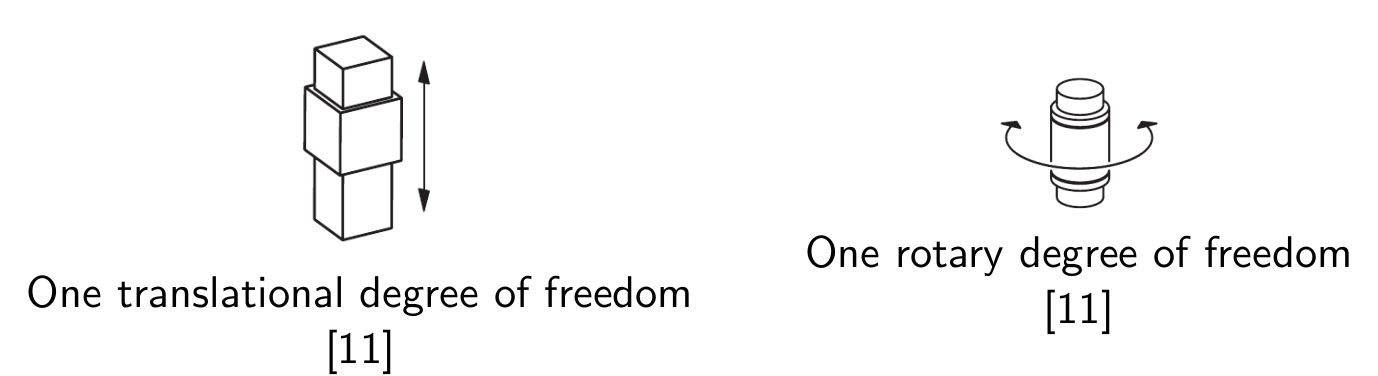

Now, let’s see how two links can be connected. In environmental models, two types of joints are particularly useful for describing how parts of furniture or other items behave. Revolute joints can perform a rotary motion, like a door hinge that allows a fridge door to swing open. Prismatic joints, on the other hand, can perform a linear motion, such as a drawer sliding in and out of a cabinet. These two types of joints are fundamental in modeling dynamic objects in the environment, helping simulate realistic interactions that robots may need to handle effectively. The two following images show examples of prismatic and revolute joints.

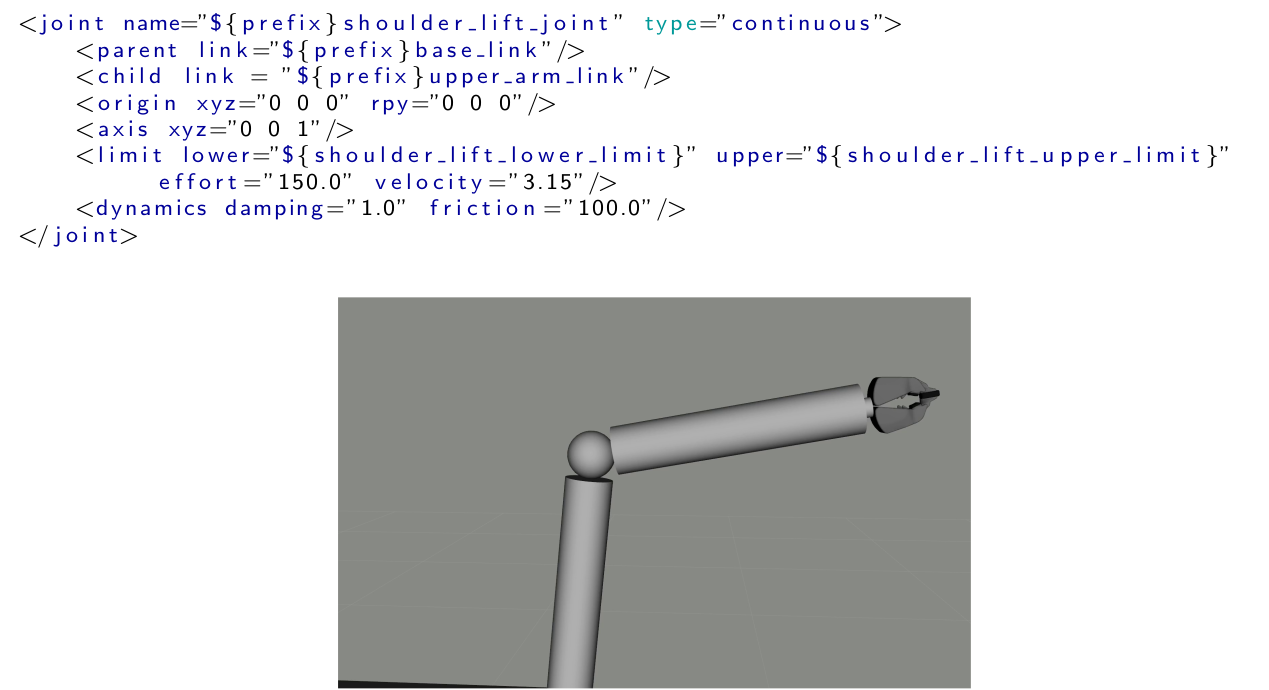

A joint in URDF defines how two links are connected and how they move relative to each other. Each joint requires specific parameters to describe its properties, such as the type of joint (e.g., revolute or prismatic), the axis of movement, and the parent and child links it connects. Additionally, limits can be defined for revolute and prismatic joints to specify the range of motion. The following image will illustrate an example of how a joint is defined in URDF for a robot arm.
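The joint parameters described above can be sketched for an environment object as follows. This is a minimal, hypothetical fridge model: the link names, joint names, axes, and limits are assumptions chosen for illustration. The Python code only parses the URDF text and lists what each joint connects.

```python
import xml.etree.ElementTree as ET

# Hypothetical fridge model: names, axes, and limits are illustrative.
FRIDGE_URDF = """
<robot name="fridge">
  <link name="fridge_body"/>
  <link name="fridge_door"/>
  <link name="fridge_drawer"/>

  <!-- Revolute joint: the door swings around a vertical hinge axis. -->
  <joint name="fridge_door_joint" type="revolute">
    <parent link="fridge_body"/>
    <child link="fridge_door"/>
    <origin xyz="0.3 0.3 0.0" rpy="0 0 0"/>
    <axis xyz="0 0 1"/>
    <limit lower="0.0" upper="1.57" effort="10" velocity="1.0"/>
  </joint>

  <!-- Prismatic joint: the drawer slides along the x axis. -->
  <joint name="fridge_drawer_joint" type="prismatic">
    <parent link="fridge_body"/>
    <child link="fridge_drawer"/>
    <origin xyz="0.0 0.0 0.2" rpy="0 0 0"/>
    <axis xyz="1 0 0"/>
    <limit lower="0.0" upper="0.4" effort="10" velocity="0.5"/>
  </joint>
</robot>
"""


def summarize_joints(urdf_text):
    """Return (name, type, parent, child, lower, upper) for every joint."""
    robot = ET.fromstring(urdf_text)
    rows = []
    for joint in robot.findall("joint"):
        limit = joint.find("limit")
        rows.append((
            joint.get("name"),
            joint.get("type"),
            joint.find("parent").get("link"),
            joint.find("child").get("link"),
            float(limit.get("lower")),
            float(limit.get("upper")),
        ))
    return rows


for row in summarize_joints(FRIDGE_URDF):
    print(row)
```

The limits of a revolute joint are given in radians and those of a prismatic joint in meters, so the door in this sketch opens to roughly 90 degrees and the drawer slides out by 0.4 m.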

Hands-On Exercise 1

Step-by-Step Hands-On Exercises

- Load a Basic URDF File Structure and visualize it

- Add and modify objects in the scene graph: Integrate models for the fridge, table, and other essential items into your URDF.

Summary

By the end of the session, you will have a working URDF environment, and you will understand the process of creating and visualizing a scene graph in URDF.

Throughout the exercises, we will provide code examples to demonstrate how to define links, joints, and meshes effectively.

To run the interactive hands-on tutorial, please click this button:

Further Reading/Exercises

For those interested in exploring more, we’ll provide links to additional ROS URDF tutorials and Gazebo documentation.

- An in-depth tutorial for URDF can be found here

- Challenge: Try adding a new object, like a cup, to your environment and adjust its position to fit the scene. This will help reinforce your understanding of how to modify URDF files.

Part 2: Scene Graphs and Knowledge Representation: Semantic Digital Twins

Goal

By the end of this session, you will understand how to extend the scene graph concept to represent knowledge in a semantic digital twin, allowing for richer interaction between the robot and its environment.

Theoretical Background

What is Knowledge Representation for Robotics and Why is it Important?

Knowledge representation in robotics is a way to organize information so that robots can reason about the world around them. It involves encoding the relationships between objects, actions, and properties, allowing robots to interpret and interact with their environment intelligently. Knowledge representation is important because it enables robots to make informed decisions, understand complex instructions, and adapt to dynamic environments, which is especially crucial for autonomous and cognitive robotic tasks. The diagram below shows what a knowledge processing system could look like. The scene graph is connected to the knowledge graph, which is then used by a reasoner to answer questions like “Which objects do I need for breakfast?” or “Which objects contain something to drink?”

Knowledge graphs and ontologies are fundamental tools in knowledge representation, particularly useful for organizing and connecting information about objects, actions, and their relationships in a meaningful way. Ontologies define the core concepts, relationships, and rules in a domain, such as what “Grasping” means and what it requires. Knowledge graphs, built on these ontologies, hold specific instances and connections, such as a particular milk carton on a table. This structure allows robots to answer targeted questions, like “What objects do I need for breakfast?”. By combining both, robots gain a flexible framework for reasoning, integrating broad concepts with real-time, specific data.
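To make the split between ontology and knowledge graph concrete, here is a small sketch in plain Python. It is not SOMA or KnowRob: the concept names, the `NEEDED_FOR` relation, and the instances are all hypothetical, and the "reasoner" is just two short functions over a set of triples.

```python
# Ontology (TBox): concepts and how they relate. All names are hypothetical.
SUBCLASS_OF = {
    "MilkCarton": "DrinkContainer",
    "DrinkContainer": "Container",
    "CerealBox": "Container",
    "Bowl": "Crockery",
}
NEEDED_FOR = {"Breakfast": {"MilkCarton", "CerealBox", "Bowl"}}

# Knowledge graph (ABox): concrete instances as (subject, predicate, object).
FACTS = {
    ("milk_1", "type", "MilkCarton"),
    ("milk_1", "on", "table_1"),
    ("cereal_1", "type", "CerealBox"),
    ("cereal_1", "in", "cupboard_1"),
    ("bowl_1", "type", "Bowl"),
    ("table_1", "type", "Table"),
}


def superclasses(concept):
    """Return the concept followed by all of its ancestors in the TBox."""
    chain = [concept]
    while chain[-1] in SUBCLASS_OF:
        chain.append(SUBCLASS_OF[chain[-1]])
    return chain


def instances_of(concept):
    """Instances whose asserted type is the concept or one of its subclasses."""
    return sorted(s for (s, p, o) in FACTS
                  if p == "type" and concept in superclasses(o))


def objects_needed_for(meal):
    """Instances whose asserted type is listed as needed for the meal."""
    needed = NEEDED_FOR[meal]
    return sorted(s for (s, p, o) in FACTS if p == "type" and o in needed)


print(objects_needed_for("Breakfast"))  # ['bowl_1', 'cereal_1', 'milk_1']
print(instances_of("DrinkContainer"))   # ['milk_1']
```

The second query only succeeds because the ontology states that a milk carton is a kind of drink container. The knowledge graph itself never says so about `milk_1`.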

SOMA (Socio-physical Model of Activities) is an example of a robot ontology that integrates both the physical and social aspects of activities to enhance the reasoning capabilities of robotic agents. It enables autonomous robots to interpret and execute everyday tasks by connecting physical actions with socially constructed knowledge. By representing the roles that objects can play, SOMA allows robots to reason about object affordances and activities in a flexible manner. This ontological approach provides a structured framework that helps robots deal with underspecified tasks, similar to how humans use context and experience to fill in gaps, thereby enabling robots to plan and adapt actions effectively to achieve their goals.

What is a Semantic Digital Twin and Why is it Important?

A semantic digital twin is an enriched digital representation of a real-world entity that includes not just physical details, but also semantic information about the relationships, roles, and functions of objects. In robotics, a semantic digital twin allows a robot to understand both the physical properties of objects (e.g., shape and material) and their intended use or role in tasks. This semantic layer is crucial for enabling robots to perform complex tasks involving interactions with multiple objects and adapting to changing environments. By incorporating semantic knowledge, robots can reason more effectively about how to complete a task, making them more capable of handling unpredictable scenarios.

What is USD and How Do We Use it to Create a Knowledge Graph?

Universal Scene Description (USD) is a powerful format for representing complex scenes and environments in 3D. In robotics, USD can be used to create a unified scene graph that integrates data from different sources like URDF, MJCF, and SDF. This standardization into USD makes it easier to enrich the scene with semantic information. Using USD, we can create a semantic map by linking scene graph nodes to concepts in a robot ontology, such as SOMA or KnowRob, effectively transforming a scene graph into a knowledge graph.

The USD file format stores 3D scenes as hierarchical scene graphs (or stages). A stage is composed of different components such as prims, layers, metadata, and schemas. So-called composition arcs further allow for efficient data sharing and reuse between different parts of a scene. USD also includes a powerful animation system that can be used to store and manage complex animations within a scene.

In USD, data is arranged hierarchically into namespaces of prims (primitives). Each prim can hold child prims, as well as attributes and relationships, which are together referred to as properties. Attributes have typed values that can change over time, while relationships are pointers to other objects in the hierarchy, with USD automatically remapping the targets when namespaces change due to referencing. In addition, both prims and properties can have non-time-varying metadata. All of these elements, including prims and their contents, are stored in a layer, which is a scene description container for USD. This hierarchical structure makes the scene easy to organize and lets transformations and properties be inherited from parent prims by their children. The following example shows how a cardboard box with two flaps can be represented using a hierarchy of prims in USD.

    def Xform "world" () {
        def Xform "box" (
            prepend apiSchemas = ["PhysicsMassAPI", ...]
        ) {
            matrix4d xformOp:transform = ( ... )
            token[] xformOpOrder = ["xformOp:transform"]
            point3f physics:centerOfMass = ( ... )
            float physics:mass = 2.79
            def Cube "geom_1" ( ... ) { ... }
            def PhysicsRevoluteJoint "box_flap_1_joint" {
                rel physics:body0 = </world/box>
                rel physics:body1 = </world/box_flap_1>
            }
            def PhysicsRevoluteJoint "box_flap_2_joint" {
                rel physics:body0 = </world/box>
                rel physics:body1 = </world/box_flap_2>
            }
        }
        def Xform "box_flap_1" ( ... ) { ... }
        def Xform "box_flap_2" ( ... ) { ... }
    }
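To illustrate how prims, attributes, and relationships fit together, the sketch below rebuilds the box hierarchy above with a toy `Prim` class in plain Python. This is deliberately not the USD Python API: `Prim` and `walk` are hypothetical stand-ins, and only the prim names, types, mass value, and relationship targets are taken from the listing.

```python
from dataclasses import dataclass, field


@dataclass
class Prim:
    """Toy stand-in for a USD prim: a typed, named node with properties."""
    type_name: str
    name: str
    attributes: dict = field(default_factory=dict)
    relationships: dict = field(default_factory=dict)  # name -> target path
    children: list = field(default_factory=list)

    def add(self, child):
        """Attach a child prim and return it for further use."""
        self.children.append(child)
        return child


def walk(prim, parent_path=""):
    """Yield (path, prim) pairs depth-first, like traversing a stage."""
    path = f"{parent_path}/{prim.name}"
    yield path, prim
    for child in prim.children:
        yield from walk(child, path)


world = Prim("Xform", "world")
box = world.add(Prim("Xform", "box", attributes={"physics:mass": 2.79}))
box.add(Prim("Cube", "geom_1"))
for i in (1, 2):
    world.add(Prim("Xform", f"box_flap_{i}"))
    box.add(Prim("PhysicsRevoluteJoint", f"box_flap_{i}_joint",
                 relationships={"physics:body0": "/world/box",
                                "physics:body1": f"/world/box_flap_{i}"}))

stage = dict(walk(world))

# Every relationship target should resolve to an existing prim path.
dangling = [target for prim in stage.values()
            for target in prim.relationships.values() if target not in stage]

print(sorted(stage))
print(dangling)
```

The joints live under `/world/box`, while the flaps they connect are siblings of the box. The relationships bridge that gap by pointing at prim paths instead of relying on nesting.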

USD as the Translation Medium for Scene Descriptions

Each simulation software uses its own scene description format: MuJoCo employs MJCF, Unreal Engine uses FBX, Omniverse utilizes USD, and ROS is compatible with URDF. In the previous tutorial, we generated an environment scene description in URDF. Now, we need to convert this URDF scene into USD. For this, we use the Multiverse Parser, which can convert and standardize scenes across various formats. The parser optimizes, standardizes, and translates scenes into USD, making it easier to convert them into other formats when needed.

Translating USD Scene Descriptions into Knowledge Graphs

The translation of a USD scene into a knowledge graph involves three steps. The first step is to establish a USD layer containing class prims that represent the TBox ontology. In the second step, another layer representing the scene graph imports the TBox USD layer and uses a custom API to tag prims with ontological concepts. In the third step, the resulting semantic USD scene graph is translated into the knowledge graph (KG).
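The three steps can be sketched roughly as follows. This is a simplified illustration in plain Python, not the actual translation tooling: the layers are modeled as dictionaries, and the `semanticTags` key, the `/TBox/...` prim paths, and the `example.org` IRIs are all hypothetical placeholders.

```python
# Step 1: TBox layer with one class prim per ontology concept (hypothetical).
TBOX_LAYER = {
    "/TBox/Container": "http://example.org/onto#Container",
    "/TBox/Flap": "http://example.org/onto#Flap",
}

# Step 2: scene layer whose prims are tagged with class prims from the TBox.
SCENE_LAYER = {
    "/world/box": {"semanticTags": ["/TBox/Container"], "physics:mass": 2.79},
    "/world/box_flap_1": {"semanticTags": ["/TBox/Flap"]},
    "/world/box_flap_2": {"semanticTags": ["/TBox/Flap"]},
}

RDF_TYPE = "rdf:type"


def scene_to_triples(tbox, scene, base="http://example.org/scene#"):
    """Step 3: emit one type triple per tag, skipping unknown class prims."""
    triples = []
    for path, props in sorted(scene.items()):
        # Turn the prim path into an individual name, e.g. world_box.
        individual = base + path.strip("/").replace("/", "_")
        for tag in props.get("semanticTags", []):
            if tag in tbox:
                triples.append((individual, RDF_TYPE, tbox[tag]))
    return triples


for triple in scene_to_triples(TBOX_LAYER, SCENE_LAYER):
    print(triple)
```

Keeping the ontology in its own layer means the same class prims can be reused to tag several scenes.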

Hands-On Exercise 2

Step-by-Step Hands-On Exercises

In the following exercise, we want to make use of the represented knowledge. To do so, we will use the KnowRob framework. It allows you to ask questions (“queries”) about the stored knowledge in order to infer the parameters required for higher-level tasks like setting a table for breakfast. This involves, for example, reasoning about likely storage locations for breakfast items.

This is only a first step into KnowRob, meant to give you an idea of how to use it in conjunction with our environment model. A more detailed introduction follows in Chapter 3.

Semantic Tagging (Optional): To learn more about how USD files are processed, experiment in our: Multiverse Knowledge Lab

Query the Knowledge Base:

Reason about the environment model (“semantic map”) in our: KnowRob Lab

Further Reading/Exercises

Explore the concept of Semantic Digital Twins in more detail through provided research papers and tutorials.

A more in-depth tutorial for OWL and RDF can be found here

Publication on translating USD scenes into knowledge graphs:

Publication for the SOMA ontology:

KnowRob, as a reasoning engine for Knowledge Graphs:

The openEASE Knowledge Service Laboratory can be found here

Challenge: Try creating an OWL ontology from your own environment and find interesting queries!