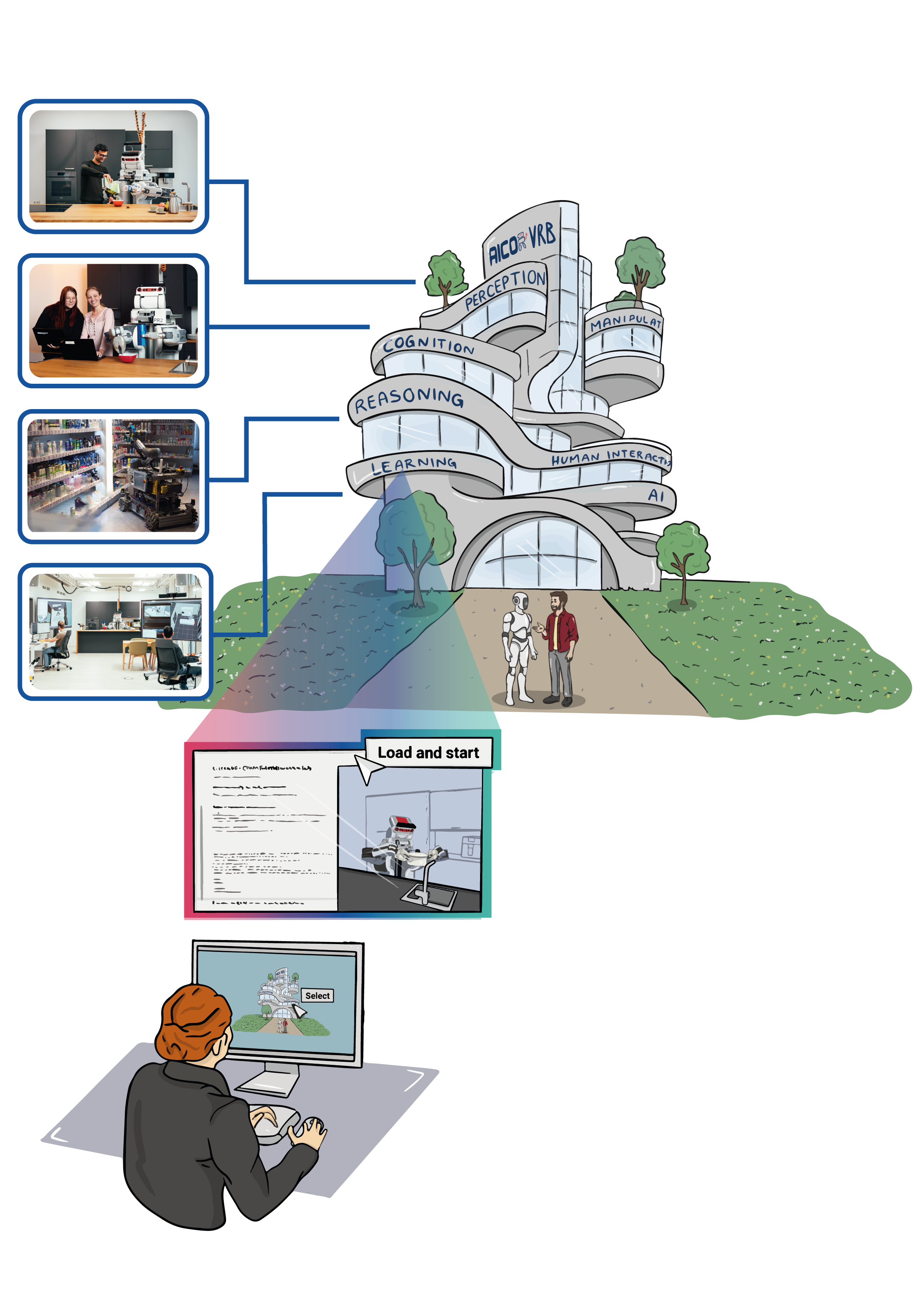

The Interactive Task Learning Lab studies learning methods that let robots not only perform specific tasks but also understand the tasks themselves. This includes understanding the goals, identifying potential unwanted side effects, and determining suitable metrics for evaluating task performance. Beyond execution, robots must comprehend the physical laws governing their environment, predict the outcomes of their actions, and align their behavior with human expectations. Central to the lab’s research is the interaction between robots and humans, in which the human acts as a teacher and conveys the conceptual framework of a task: its underlying concepts and how they relate to each other. Robots must therefore recognize the limits of their knowledge, actively ask for help, and act on advice in a way that reflects an understanding of its purpose and of how it should change their actions. The lab's work aims at more intuitive and collaborative human-robot interaction.

For more information, visit the Interactive Task Learning webpage to get a better idea of how a robot can learn from different teaching methodologies.

Interactive Actions and/or Examples

Description

ITL is an emerging A.I. challenge, defined as “any process by which an agent improves its performance on some task through experience, when [that experience] consists of a series of sensing, effecting, and communicating interactions between (the agent), its world, and crucially other agents in the world” (John Laird, Kevin A. Gluck). An ITL setup is an apprentice-style learning approach in which most aspects of a task can be taught explicitly by an instructor, and the student accumulates task-specific knowledge both from these interactions and from past experience in order to execute novel tasks. We investigate the predominant natural interaction methods that humans use to instruct each other, which include bootstrap task-specific instruction (“telling what to do”) and demonstration (“showing how to do it”).
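The apprentice-style loop described above can be illustrated with a toy sketch. It is not the lab's implementation: the class `ApprenticeAgent`, its methods, and the example task and step names are all hypothetical, chosen only to show the two teaching channels (instruction and demonstration) feeding one store of task knowledge, and the agent asking for help when it lacks that knowledge.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ApprenticeAgent:
    """Toy learner that accumulates task knowledge from a teacher (hypothetical)."""

    knowledge: dict[str, list[str]] = field(default_factory=dict)

    def instruct(self, task: str, steps: list[str]) -> None:
        # "Telling what to do": the teacher states the steps explicitly.
        self.knowledge[task] = list(steps)

    def demonstrate(self, task: str, observed_actions: list[str]) -> None:
        # "Showing how to do it": the agent stores the actions it observed.
        self.knowledge[task] = list(observed_actions)

    def execute(self, task: str) -> list[str]:
        # Unknown task: recognize the knowledge gap and ask the teacher.
        if task not in self.knowledge:
            return [f"ask teacher: how do I {task}?"]
        # Known task: reuse the accumulated task-specific knowledge.
        return self.knowledge[task]


agent = ApprenticeAgent()
first_try = agent.execute("pour water")  # nothing learned yet
agent.instruct("pour water", ["grasp cup", "tilt cup", "place cup"])
second_try = agent.execute("pour water")  # now uses the taught steps
```

In this sketch both channels write to the same dictionary for simplicity; a real system would have to interpret language and perceive demonstrations, which the sketch deliberately leaves out.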

Example Videos

VR Human task demonstrations

VR Human task demonstrations as NEEM

PR2 Pouring task demonstrations with PyCRAM